Command And Conquer: Test Strategy Plan for Enterprises

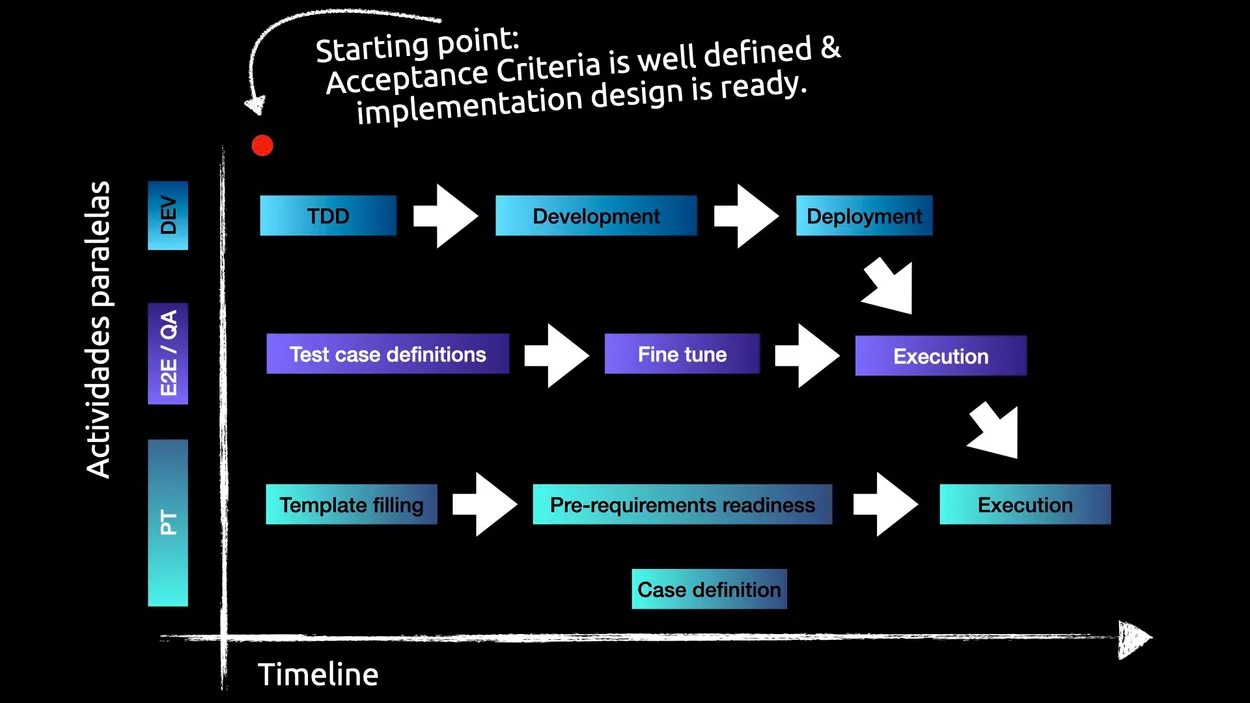

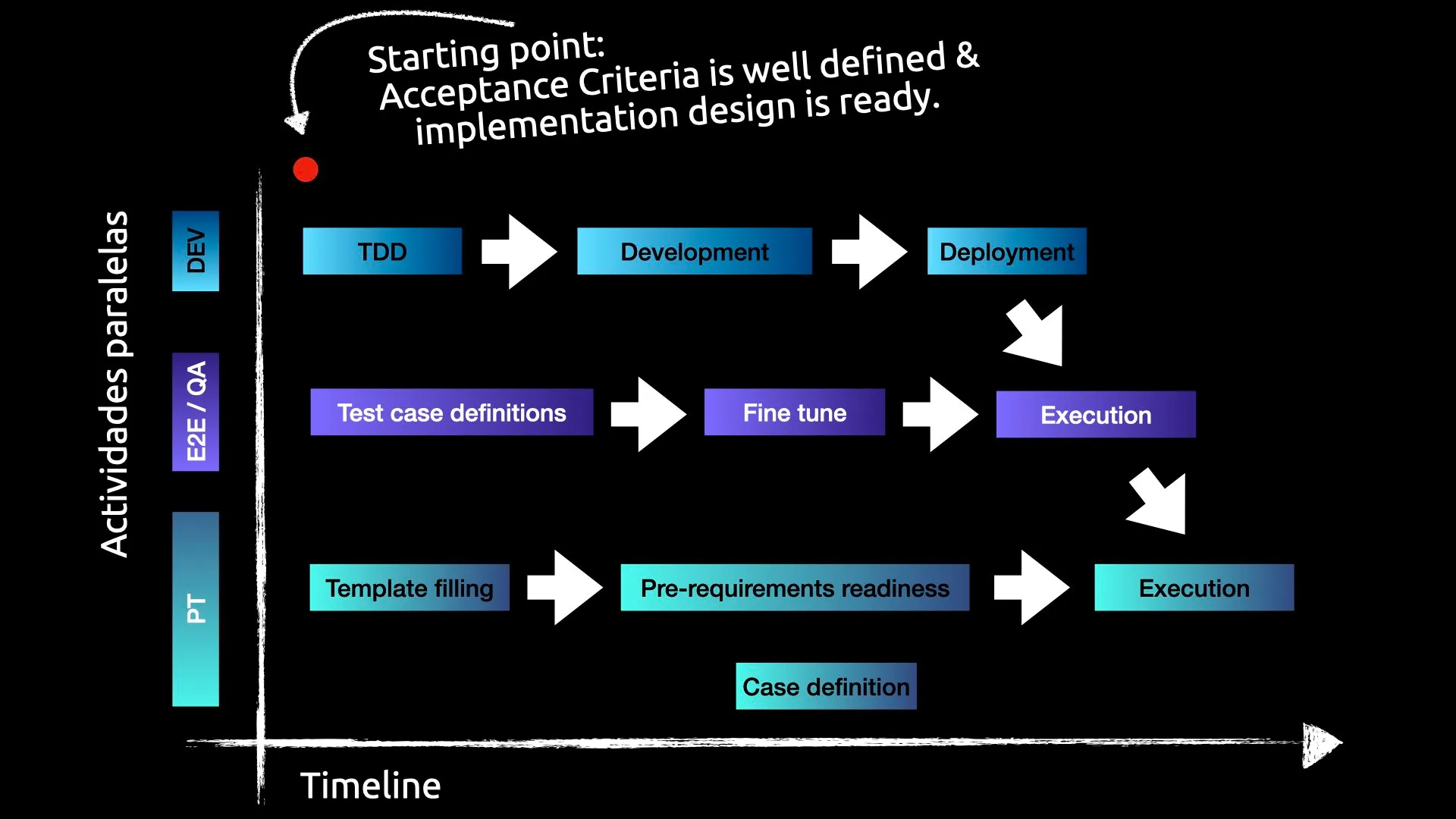

Parallel tracks and transparency that start in the early stages of development.

1. Introduction

In today’s complex software landscape, delivering high-quality, resilient, and performant applications is not a luxury—it’s a requirement. This strategy moves away from a traditional model where testing is a final, separate phase. Instead, it integrates quality assurance into every stage of the software development lifecycle.

This document outlines a proactive approach designed to “Command and Conquer” software complexity. This is achieved by embedding a parallel multi-layered testing strategy throughout the entire process—from initial planning and design, through development and integration, to final deployment and monitoring. By considering quality from the outset, we build confidence incrementally and ensure our systems are robust from the individual component to the end-user experience.

The core of our methodology is parallel test development, where unit tests, integration tests, and end-to-end tests are all initiated from the moment the acceptance criteria are defined. This approach promotes transparency, collaboration, and early detection of both system failures and test design issues. It’s particularly valuable when team members aren’t familiar with all applications involved, as it ensures knowledge sharing happens continuously throughout the development process.

2. Parallel Multi-Layer Testing Strategy

Our testing strategy is built on complementary layers that can progress in parallel from the very beginning of development. The three functional layers below each serve a specific purpose and are equally critical to software quality; performance tests (section 5) add a fourth, non-functional layer:

- Unit Tests: Verify individual units of code work correctly in isolation.

  - Can start the moment business acceptance criteria are defined.

- Integration Tests: Ensure different modules and services interact properly.

  - Can start as soon as cloud infrastructure and service contracts are defined.

- End-to-End Tests: Validate complete user journeys and business flows across the entire system.

  - Planning can start the moment the acceptance criteria are defined, even before development begins. Execution requires a stable environment, but even so, the tests can be developed in parallel.

3. Layer 1: Unit Tests

Objective: To verify that individual, isolated units of code (e.g., functions, methods, or components) work correctly. This is our first line of defense against bugs.

Scope:

- The smallest testable part of an application.

- All dependencies (like databases or external APIs) are mocked or stubbed.

- Focus on business logic, edge cases, and algorithmic correctness within a single unit.
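The scope above can be illustrated with a minimal sketch using Python's standard `unittest` and `unittest.mock` modules. The function `booking_total`, the `rate_client` dependency, and the 10% discount rule are hypothetical names and rules invented for this example; the point is only that the external dependency is mocked and the tests target business logic and an edge case.

```python
import unittest
from unittest.mock import Mock


def booking_total(rate_client, property_id: str, nights: int) -> float:
    """Return the price of a stay; stays of 7+ nights get a 10% discount (hypothetical rule)."""
    if nights <= 0:
        raise ValueError("nights must be positive")
    nightly_rate = rate_client.get_nightly_rate(property_id)
    total = nightly_rate * nights
    return total * 0.9 if nights >= 7 else total


class BookingTotalTest(unittest.TestCase):
    def setUp(self):
        # The external rate service is replaced by a mock: no network, no database.
        self.rate_client = Mock()
        self.rate_client.get_nightly_rate.return_value = 100.0

    def test_short_stay_has_no_discount(self):
        self.assertEqual(booking_total(self.rate_client, "p-1", 3), 300.0)
        self.rate_client.get_nightly_rate.assert_called_once_with("p-1")

    def test_week_long_stay_gets_discount(self):
        self.assertAlmostEqual(booking_total(self.rate_client, "p-1", 7), 630.0)

    def test_invalid_nights_rejected_before_calling_dependency(self):
        # Edge case: the unit must fail fast without touching the dependency.
        with self.assertRaises(ValueError):
            booking_total(self.rate_client, "p-1", 0)
        self.rate_client.get_nightly_rate.assert_not_called()
```

Saved as a test module, this can be run with `python -m unittest`. Because nothing leaves the process, a failure points directly at `booking_total`.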

Key Characteristics:

- Fast: A full suite should run in seconds or a few minutes.

- Isolated: A failing test points directly to the specific unit of code that is broken.

- Developer-Owned: Written by the same developers who write the feature code, often in a Test-Driven Development (TDD) fashion.

Recommended Tools:

- JavaScript/TypeScript: Jest, Vitest

- Java: JUnit, Mockito

- .NET: NUnit, xUnit

- Go: Go testing package, Testify

- Python: unittest, pytest

Ownership: Development Teams.

Success Criteria:

- A high level of meaningful code coverage (target: >80%).

- Code quality metrics (e.g., cyclomatic complexity, duplication) meet defined thresholds.

- All unit tests must pass in the CI/CD pipeline before a code merge is allowed.

Deliverables:

- A comprehensive suite of unit tests covering all new and modified code. These tests live alongside the production code, ensuring they are maintained and updated as the code evolves.

4. Layer 2: Integration Tests

Objective: To ensure that different modules, services, or components interact correctly. These tests verify the “plumbing” of the application.

Scope:

- Interactions between services (e.g., API-to-API communication).

- Communication with external systems like databases, caches, and message queues.

- Verification of APIs, authentication systems, and health checks.
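As a rough illustration of the health-check item above, the sketch below starts a stand-in HTTP service on a local port and calls it over a real socket, using only the Python standard library. The `/health` path, the `{"status": "ok"}` payload, and all function names are assumptions made for this example; in a real suite the service would more likely be started through Testcontainers or Docker Compose.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Stand-in service exposing a hypothetical /health endpoint."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def check_health(base_url: str, timeout: float = 2.0) -> dict:
    """Call the service over real HTTP and return the parsed health payload."""
    # An empty ProxyHandler bypasses any proxy configured in the environment.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(f"{base_url}/health", timeout=timeout) as response:
        if response.status != 200:
            raise RuntimeError(f"unexpected status {response.status}")
        return json.loads(response.read())


def run_health_check_against_local_service() -> dict:
    # Port 0 asks the OS for any free port, so parallel runs do not collide.
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        return check_health(f"http://127.0.0.1:{server.server_port}")
    finally:
        server.shutdown()
        server.server_close()
```

Unlike a unit test, nothing here is mocked: the request crosses a real network boundary, which is what makes this kind of test slower but able to catch wiring problems.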

Key Characteristics:

- Slower than unit tests as they involve network communication and running services.

Recommended Tools:

- API Testing: Postman (with Newman for automation), Supertest

- Environment Management: Testcontainers, Docker Compose

Ownership: CloudOps / DevOps team, with support from development teams.

Success Criteria:

- Successful data flow and communication between integrated services.

Deliverables:

- The confirmation that key services and components work together as expected.

5. Layer 3: Performance Tests

Objective: To validate non-functional requirements, ensuring the application is fast, scalable, and stable under expected load conditions.

Scope:

- Critical user journeys and business-critical API endpoints.

- Infrastructure capacity and breaking points.

- System behavior over extended periods (endurance testing).

Methodology: All performance tests must be preceded by a formal Performance Test Plan, which standardizes the process. This plan answers the following key questions:

- WHO: Who is responsible for defining, executing, and analyzing the test? This ensures clear ownership and responsibility. Additionally, a backup resource can be defined in case the primary tester is unavailable, as well as those responsible for validating and approving the results.

- WHY: What is the primary driver for this test? Understanding the reason (e.g., a new feature launch, preparation for a high-traffic event like Black Friday, or investigating a production slowdown) helps highlight dependencies and indicates if other teams or applications might also need to conduct performance tests.

- USE CASES: What real-world, end-user functionalities should this performance test cover? This helps others understand the context and implications of the test results.

- WHAT: Which services, APIs, and infrastructure components are in scope?

- WHEN: Planned date for the test to be executed.

- HOW: What is the test methodology (load, stress, endurance)? How will data be prepared and authentication handled? And most importantly: how will the test results be monitored and collected? API response times and status codes can all look green on standard APIs, but when asynchronous processes, queues, or background jobs are involved, the real results of a performance test can be hiding behind the scenes.

- HOW MUCH: What are the expected load profiles (normal vs. peak)? What are the specific, measurable Service Level Objectives (SLOs) that define success (e.g., p95 response time < 500ms, error rate < 0.1%)?

- RESULTS: Once the test is complete, the results should be documented and compared to the defined SLOs. Any deviations should be investigated and addressed, and a determination should be made as to whether the test was successful, unsuccessful, or if retesting is required.
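To make the HOW MUCH and RESULTS items concrete, here is a minimal sketch of comparing raw samples against the example SLOs quoted above (p95 response time < 500 ms, error rate < 0.1%). The `Sample` type and function names are hypothetical, the percentile uses the nearest-rank method, and treating only 5xx responses as errors is an assumption; load tools such as k6 or Gatling report these figures themselves.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    duration_ms: float
    status_code: int


def percentile(values: list, pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_slos(samples: list, p95_limit_ms: float = 500.0,
                  max_error_rate: float = 0.001) -> dict:
    """Compare measured p95 latency and error rate with the SLO thresholds."""
    p95 = percentile([s.duration_ms for s in samples], 95)
    # Assumption: only server-side failures (5xx) count as errors.
    errors = sum(1 for s in samples if s.status_code >= 500)
    error_rate = errors / len(samples)
    return {
        "p95_ms": p95,
        "error_rate": error_rate,
        "passed": p95 < p95_limit_ms and error_rate < max_error_rate,
    }


# Illustrative data only: 2,000 requests, one of which failed slowly.
samples = (
    [Sample(120.0, 200)] * 1900
    + [Sample(450.0, 200)] * 99
    + [Sample(900.0, 503)]
)
report = evaluate_slos(samples)
```

A check like this only covers what the load generator can see. It says nothing about work that was accepted and queued, which is why the HOW item insists on defining monitoring up front.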

Refer to the Performance Test Plan Template at the end of this document, which should be completed before initiating any performance test.

Recommended Tools:

- Load Generation: k6, Gatling, JMeter, Artillery

- Monitoring: Datadog, Grafana, New Relic, or your cloud provider's monitoring and insights services.

Ownership: Performance Engineering / SRE / DevOps Teams, in collaboration with Development Teams.

Success Criteria:

- The system must meet all defined SLOs under the specified peak load scenario.

- No memory leaks or performance degradation are observed during endurance tests.

Deliverables:

- The completed Performance Test Plan template.

- Performance test scripts versioned in a repository accessible to the entire organization.

- A comprehensive performance test report, based on the template, documenting that the tests were conducted according to the defined criteria and comparing the results against the SLOs.

6. Layer 4: End-to-End (E2E) Tests

Objective: To simulate real user scenarios from start to finish, validating the entire application workflow across the UI and all backend services. This provides the highest level of confidence that the system as a whole works for the end user.

Scope:

- Critical business flows (e.g., user registration and login, search-to-checkout process, report generation).

- User interactions across multiple pages and components.

Key Characteristics:

- The most comprehensive type of test.

- The slowest and most fragile due to their dependency on a fully deployed, integrated environment.

- Should be reserved for the most critical “happy path” scenarios.

Recommended Tool:

To mitigate the inherent complexity and brittleness of E2E tests, our standard is @lab34/flows. This modern framework is chosen for its focus on creating readable, maintainable, and powerful tests that truly represent user journeys.

Benefits of @lab34/flows:

- Declarative Syntax: Tests are written in a way that clearly describes the user’s intent, making them easy to understand for both technical and non-technical stakeholders.

- Robust Orchestration: Simplifies complex interactions, like managing authentication, handling asynchronous operations, and chaining multiple API calls and UI actions.

- Maintainability: By focusing on flows rather than individual UI selectors, tests are more resilient to minor changes in the application’s UI.
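The idea of describing a journey as a sequence of readable steps can be sketched generically. The code below is not the @lab34/flows API: `Flow`, `FakeShop`, and `build_checkout_flow` are hypothetical names written for this illustration, and the application under test is replaced by an in-memory stand-in.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Flow:
    """A named user journey made of ordered, human-readable steps."""
    name: str
    steps: list = field(default_factory=list)

    def step(self, description: str, action: Callable[[dict], None]) -> "Flow":
        self.steps.append((description, action))
        return self  # returning self allows steps to be chained

    def run(self) -> list:
        context: dict = {}  # shared state, e.g. a session passed between steps
        report = []
        for index, (description, action) in enumerate(self.steps):
            try:
                action(context)
                report.append((description, "passed"))
            except Exception as error:
                report.append((description, f"failed: {error!r}"))
                # Later steps depend on earlier ones, so mark them as skipped.
                report.extend((later, "skipped") for later, _ in self.steps[index + 1:])
                break
        return report


class FakeShop:
    """In-memory stand-in for the application under test."""

    def __init__(self):
        self.users, self.orders = {}, []

    def register(self, email: str, password: str) -> None:
        self.users[email] = password

    def login(self, email: str, password: str) -> str:
        if self.users.get(email) != password:
            raise PermissionError("invalid credentials")
        return f"session-{email}"

    def checkout(self, session: str, item: str) -> None:
        self.orders.append((session, item))


def build_checkout_flow(shop: FakeShop) -> Flow:
    def register(ctx):
        shop.register("ana@example.com", "s3cret")

    def login(ctx):
        ctx["session"] = shop.login("ana@example.com", "s3cret")

    def checkout(ctx):
        shop.checkout(ctx["session"], "beach-house")

    return (
        Flow("Registration to checkout")
        .step("A new user registers", register)
        .step("The user logs in", login)
        .step("The user checks out a booking", checkout)
    )
```

Calling `build_checkout_flow(FakeShop()).run()` returns one `(description, outcome)` pair per step, so the report reads like the journey itself rather than like a list of selectors.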

Ownership: All ICT team members, from designers and developers to QA / Automation Engineering Teams.

Success Criteria:

- All critical user journeys pass successfully in a production-like environment before a release.

- Tests are run on a regular schedule (e.g., nightly builds) to catch regressions early.

Deliverables:

- A suite of E2E tests covering all critical user flows, written using the @lab34/flows framework, ensuring they are readable and maintainable. These tests should be stored in a repository accessible to the entire organization.

- An E2E test results report detailing the tests run, results, and any issues found.

- Screenshots, if applicable, to document the execution.

Implementation Timeline for all parallel activities

As a starting point, we recommend that all layers begin in parallel as soon as the business acceptance criteria and implementation guidelines are defined.

Once these requirements are well defined, TDD, the definition of E2E test cases, and the creation of the performance test plan (if applicable) can begin immediately.

The E2E suite is not expected to be complete from the start, because developers may discover additional checks during development. For that reason, E2E testing should be overseen by the development team, although the QA team can still begin defining test cases earlier.

The same applies to performance testing. The performance test plan should be started before the code is deployed and, as with E2E testing, the development team should oversee the definition of the endpoints and flows to be tested, since additional endpoints that need coverage may be discovered during development (which can also help identify prerequisites).

In short, if both the use cases and performance test definitions, as well as the knowledge of how to execute them, are shared (in repositories accessible to all teams):

- Dependency bottlenecks between teams are eliminated.

- There is a broader and more comprehensive view of the requirements and checks that must be covered.

- A culture of collaboration, ownership, and shared responsibility is fostered, as everyone can review, contribute, and learn from the tests.

More Information On How To Implement

We work hand in hand with our customers to implement this and other strategies effectively. If you want to know more about how to implement this strategy in your organization, please contact us at hola@lab34.es.

We have top-notch profiles, from senior developers to architects and ex-Apple QA testers, who can help you implement testing and other strategies in your organization.

Bonus: Performance Test Plan Template

This template serves as an official record for defining the scope, methodology, and success criteria for performance tests. Its purpose is to standardize and ensure the consistency and traceability of the tests.

Storing this document where all stakeholders can access it (e.g., a shared drive, project management tool, or version control repository) is crucial for transparency and collaboration. Anyone, including new team members, can then understand the context and details of performance tests conducted in the past and learn how to plan future ones.

Need to plan for a performance test? Use this template to get started: Download the Performance Test Plan Template.

General Information

- Project/Change Name:

- Request Date:

- Requesting Team:

- Technical Lead:

- Brief Description of the Change: (e.g., Implementation of a new search algorithm in the properties API to improve efficiency).

WHO: Responsibilities and Ownership

- Test Owner: (Name and role of the individual or team responsible for defining, executing, and analyzing the test).

- Backup Resource: (Name and role of the individual or team who can act as backup if the primary owner is unavailable).

- Results Validator: (Name and role of the individual or team responsible for reviewing and approving test results).

WHY: The Rationale for Testing

This section explains the primary driver for the test. Understanding the reason is crucial for context and for identifying potential impacts on other teams or systems.

- Primary Driver: (e.g., A new feature launch, preparation for a high-traffic event like Black Friday, investigating a production slowdown, or proactive validation of a recent architectural change).

- Known Dependencies: (List any other teams or applications that might be affected by this change or that this change depends on, which might warrant their own performance testing).

USE CASES: Critical Features

This section describes the real-world, end-user-facing features that this performance test should cover. This helps others understand the context and implications of the test results.

- Key Features to Test: (e.g., Property Search, Booking Process, Report Generation).

- End User Impact: (Describe how these features affect the end-user experience and why their performance is critical).

- User Scenarios: (Provide specific examples of how users interact with these features in real-world situations).

WHAT: Components to be Tested

This section identifies all system elements involved in the test.

1. Key Functionalities and Endpoints

Specify the URLs, services, or specific functions to be tested. Add as many rows as necessary.

| Endpoint / Function | HTTP Method | Brief Description |
|---|---|---|
| (Example) /api/v2/properties/search | GET | Search for vacation rentals |

2. Involved Infrastructure

Specify the infrastructure components that will support the load.

- Application Servers: [e.g., Kubernetes Cluster 'webapp-prod']

- Databases: [e.g., Database instance 'db-main-prod' (MongoDB)]

- Cache Systems: [e.g., Redis Cluster 'cache-prod']

- Load Balancers: [e.g., Google Cloud Application Load Balancer]

- Critical External Dependencies: [e.g., Stripe API for payments, Auth0 for authentication]

WHEN: Test Schedule

- Planned Test Execution Date: [e.g., 2024-07-15]

HOW: Test Methodology

This section details how the test will be configured and executed.

1. Type of Performance Test

Select the primary type of test to be performed.

- Load Test: Validate performance under normal and expected peak workload.

- Stress Test: Identify the system’s breaking point by increasing the load beyond the expected maximum.

- Endurance Test: Evaluate system stability under a sustained load for a long period.

2. Data Preparation

Is specific data needed to run the test realistically?

- No, specific data is not required.

- Yes. Describe the requirements below:

(Example: 10,000 active users with previous bookings are needed. The data will be obtained by anonymizing a production sample and loading it into the staging environment).

3. Authentication and Authorization

How will the testing tool authenticate with the services?

- Endpoints are public (no authentication).

- Access Token (JWT, API Key): A static token generated for the test will be used.

- User Credentials: A pool of test users will be used.

- Other: (Specify the method)

4. Tools

- Test Execution Tool: [e.g., JMeter, Gatling, k6]

- Monitoring Tool: [e.g., Grafana, Datadog, New Relic]

5. Monitoring and Metrics Collection

Describe how the performance test results will be monitored and collected. This is crucial for understanding the system’s behavior under load, especially when asynchronous processes, queues, or background jobs are involved.
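One way to capture what happens behind the API is to keep observing the backlog after the load generator stops. The sketch below is a hypothetical helper, not part of any monitoring tool: `get_queue_depth` stands for whatever probe your message broker or job system exposes, and the drain time it reports is a metric that HTTP response times alone would not show.

```python
import time
from typing import Callable


def wait_for_backlog_to_drain(
    get_queue_depth: Callable[[], int],
    timeout_s: float,
    poll_interval_s: float = 1.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll a queue-depth probe after load stops; report peak depth and drain time."""
    started = clock()
    peak_depth = 0
    while True:
        depth = get_queue_depth()
        peak_depth = max(peak_depth, depth)
        elapsed = clock() - started
        if depth == 0:
            # All asynchronous work has been processed.
            return {"drained": True, "drain_time_s": elapsed, "peak_depth": peak_depth}
        if elapsed >= timeout_s:
            # The backlog is still growing or draining too slowly.
            return {"drained": False, "drain_time_s": elapsed, "peak_depth": peak_depth}
        sleep(poll_interval_s)


# Usage with a fake probe that simulates a backlog shrinking over four polls.
fake_depths = iter([40, 25, 5, 0])
result = wait_for_backlog_to_drain(
    lambda: next(fake_depths), timeout_s=10, poll_interval_s=0, sleep=lambda _: None
)
```

The `clock` and `sleep` parameters are injectable only so the helper can be exercised quickly in tests; in a real run the defaults apply.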

HOW MUCH: Load and Acceptance Criteria

This section defines the quantitative metrics for the test’s success.

1. Load Profile

Describe the load scenarios to be simulated.

[ ] Scenario 1: Normal Load

- Description: Simulates the average activity of a business day.

- Concurrent Users: [e.g., 1,000]

- Requests per Second (RPS): [e.g., 500]

- Duration: [e.g., 30 minutes]

[ ] Scenario 2: Peak Load

- Description: Simulates the activity of a high-demand day (e.g., start of a holiday weekend).

- Concurrent Users: [e.g., 5,000]

- Requests per Second (RPS): [e.g., 2,500]

- Duration: [e.g., 1 hour]
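Before running a scenario, it helps to sanity-check that its numbers are consistent with each other. The sketch below takes the example values from the two scenarios above and derives the total request volume and, using Little's Law (concurrent users = throughput × time per user cycle), how often each simulated user must issue a request. The `LoadScenario` class is a hypothetical helper for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoadScenario:
    name: str
    concurrent_users: int
    requests_per_second: int
    duration_minutes: int

    def total_requests(self) -> int:
        """Requests the system will receive over the whole run."""
        return self.requests_per_second * self.duration_minutes * 60

    def seconds_per_user_cycle(self) -> float:
        """Little's Law rearranged: cycle time = users / throughput.

        This is response time plus think time for one simulated user.
        """
        return self.concurrent_users / self.requests_per_second


NORMAL = LoadScenario("Normal Load", concurrent_users=1_000,
                      requests_per_second=500, duration_minutes=30)
PEAK = LoadScenario("Peak Load", concurrent_users=5_000,
                    requests_per_second=2_500, duration_minutes=60)

for scenario in (NORMAL, PEAK):
    print(f"{scenario.name}: {scenario.total_requests():,} requests, "
          f"one request per user every {scenario.seconds_per_user_cycle():.1f} s")
```

With the example values, the normal scenario amounts to 900,000 requests and the peak scenario to 9,000,000, and in both cases each user sends one request every 2 seconds. If that cycle time is shorter than the realistic response time plus think time, the user count or the target rate in the plan needs revisiting.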

2. Traffic Distribution per Endpoint

Define what percentage of the total requests will go to each endpoint in each scenario.

| Endpoint | Normal Load (%) | Peak Load (%) |
|---|---|---|
| (Example) /api/v2/properties/search | 80% | 90% |
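A traffic distribution like the one in this table can be turned into a request mix with weighted random selection. In the sketch below, `/api/v2/properties/search` and its 80% share come from the example row; the `/api/v2/bookings` endpoint and its 20% share are hypothetical, added only so the percentages total 100.

```python
import random
from collections import Counter


def validate_distribution(weights: dict) -> None:
    """Reject a plan whose per-endpoint percentages do not add up to 100."""
    total = sum(weights.values())
    if abs(total - 100) > 1e-9:
        raise ValueError(f"percentages must total 100, got {total}")


def pick_endpoints(weights: dict, count: int, seed=None) -> list:
    """Draw `count` endpoints at random, in proportion to their percentage."""
    validate_distribution(weights)
    rng = random.Random(seed)  # a seed makes the mix reproducible between runs
    return rng.choices(list(weights), weights=list(weights.values()), k=count)


NORMAL_LOAD = {
    "/api/v2/properties/search": 80,  # from the example row
    "/api/v2/bookings": 20,           # hypothetical second endpoint
}

requests_to_send = pick_endpoints(NORMAL_LOAD, count=10_000, seed=42)
observed_share = Counter(requests_to_send)
```

Validating the total first catches a common planning mistake, where rows are added to the table without rebalancing the others. Most load tools offer their own way to weight scenarios, so this logic usually lives in the tool's configuration rather than in custom code.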

3. Acceptance Criteria (SLOs)

The test will be considered PASSED if the following conditions are met during the execution of the Peak Load scenario.

| Metric | Acceptable Threshold |
|---|---|
| Response Time (95th percentile) | Less than [e.g., 800] ms |
| Error Rate | Less than [e.g., 0.5] % |
| CPU Usage (Servers) | Less than [e.g., 80] % |
| Memory Usage (Servers) | Less than [e.g., 85] % |

RESULTS: Post-Test Documentation and Analysis

Once the test is completed, the results should be documented and compared to the defined SLOs. Any deviations should be investigated and addressed, and a determination should be made as to whether the test was successful, unsuccessful, or if retesting is required.

- Test Results: (Attach a detailed report of the results, including graphs and analysis).

- SLO Compliance: (Indicate whether all defined SLOs were met. If not, provide details on deviations and possible causes).

- Recommended Actions: (List any necessary corrective or follow-up actions based on the test results).

- Final Test Status: (Select one)

  - Passed

  - Passed with Remarks

  - Failed - Requires Corrective Action

  - Requires Retest